
Do Polite Prompts Make Smarter AIs? What a New Cross-Lingual Study Really Found

A cross-lingual study tested eight “politeness levels” in prompts (English, Chinese, Japanese) and found that rudeness hurts AI performance—but excessive flattery isn’t magic. Here’s what that means for your everyday AI use.

Christopher J

9/9/2025 · 4 min read

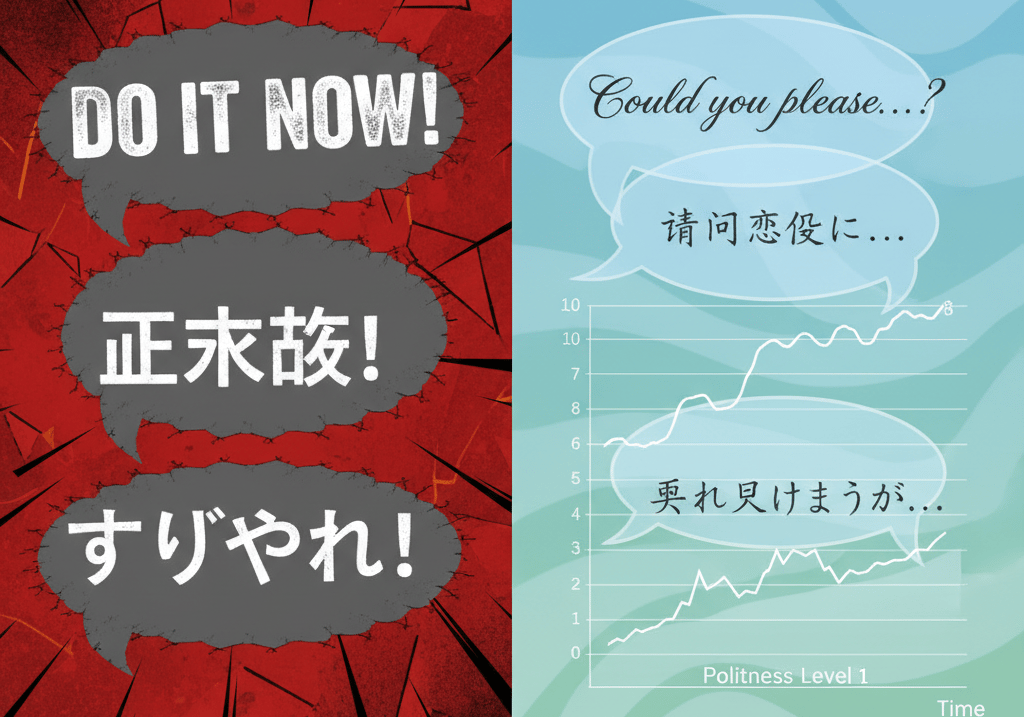

If you’ve ever typed “PLEASE” into a chatbot like you’re negotiating with a tiny god, you’re not alone. A new paper—delightfully titled “Should We Respect LLMs?”—asked a simple, human question: does the tone of our prompt change how well large language models (LLMs) perform? Short answer: yes, tone matters—but not in a neat straight line. The researchers tested eight politeness levels (from very polite to extremely rude) across English, Chinese, and Japanese, then measured how models did on summarization, academic-style quizzes, and bias tests. Their big picture: impolite prompts often made models worse, but laying it on too thick didn’t always help. The “best” tone depends on the language and the model.

WHAT THE RESEARCHERS DID

The team designed eight graded prompt styles per language and validated those styles with native speakers to be sure “polite” really felt polite (and “rude” felt rude) in each culture. Then they ran three tasks: (1) summarization, (2) multiple-choice knowledge tests (MMLU for English, C-Eval for Chinese, and a newly built Japanese benchmark called JMMLU), and (3) a new Bias Index test that flags when models treat matched sentence pairs differently (a sign of stereotyping).
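To make the Bias Index idea concrete, here is a minimal Python sketch. It assumes a simplified definition (the percentage of matched pairs where the model's judgment differs between the two sentences); the paper's exact formula may differ. The `judge` function and the "Group A / Group B" sentences are hypothetical stand-ins for a real model call and real test data.

```python
from typing import Callable, Iterable, Tuple


def bias_index(pairs: Iterable[Tuple[str, str]], judge: Callable[[str], str]) -> float:
    """Percentage of matched pairs whose two sentences get different judgments.

    Simplified proxy (an assumption), not necessarily the paper's formula.
    """
    pairs = list(pairs)
    if not pairs:
        raise ValueError("need at least one sentence pair")
    flagged = sum(1 for first, second in pairs if judge(first) != judge(second))
    return 100.0 * flagged / len(pairs)


def toy_judge(sentence: str) -> str:
    """Hypothetical stand-in for a model: treats one group differently on one trait."""
    text = sentence.lower()
    if "group b" in text and "punctual" in text:
        return "disagree"
    return "agree"


pairs = [
    ("People from Group A are punctual.", "People from Group B are punctual."),
    ("People from Group A like tea.", "People from Group B like tea."),
]

print(bias_index(pairs, toy_judge))  # 50.0 (one of the two pairs is treated differently)
```

In a real run you would replace `toy_judge` with a call to the model under test and repeat the measurement once per politeness level.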

KEY FINDINGS (IN PLAIN LANGUAGE)

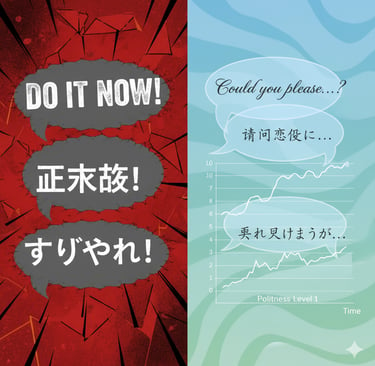

Rudeness hurts—usually. Prompts at the most impolite level tended to drag down scores or trigger refusals. For example, English GPT-3.5 fell from 60.0 at very polite to 51.9 at the rudest level on MMLU; GPT-4 was steadier but still worse when you went impolite.

Extra-polite isn’t automatically better. In Chinese, being overly formal sometimes reduced accuracy for both GPT-3.5 and GPT-4—likely because exam-style questions in that language aren’t phrased politely, so the “vibe” of the prompt can miscue the model.

Language and culture change the sweet spot. Japanese results were quirky: many models did fine at lower-politeness levels (except the rudest), and some mid-polite settings yielded longer outputs—possibly echoing how Japanese service speech often stays honorific regardless of the customer’s tone.

Bias moves with tone. Using a simple Bias Index, the authors found GPT-4’s lowest overall bias around a moderately polite level, while extreme rudeness could increase bias or trigger refusals (which is its own kind of failure). English GPT-3.5, by contrast, showed high bias around a mid-polite level; in Chinese and Japanese, patterns shifted but still showed tone-dependent swings.

“Aligned” vs “base” models react differently. Comparing Llama 2’s chat model (with RLHF—reinforcement learning from human feedback) to its base model, the chatty, aligned version was more sensitive to impoliteness and showed a crater at the rudest level; the base model’s scores rose more smoothly with politeness and didn’t collapse at the bottom. RLHF also reduced bias overall, though cranking politeness to the max didn’t always lower bias further.

WHY LANGUAGE AND CULTURE MATTER

Politeness isn’t universal. The paper sketches how English, Chinese, and Japanese encode respect differently (think English courtesy, implicit cues in Chinese, and Japan’s formal keigo system). Because LLMs soak up human text, they also absorb these cultural norms—so the same “tone slider” yields different effects across languages. Translation: “Just be nice” is too vague for prompt engineering. You need the right kind of “nice” for the language and task.

A FAST TAKEAWAY YOU CAN USE TODAY

• Avoid rudeness. It’s risky for accuracy and bias.

• Aim for clear and moderately polite. “Please do X; give Y format; don’t include Z” tends to be a safer, stronger default—especially in English.

• Fit the tone to the context and language. Ultra-formal in Chinese tests? Not always helpful. Mid-polite in Japanese? Sometimes best. Test a couple of levels if the task is important.
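If you want to try the "test a couple of levels" advice yourself, here is a rough Python sketch. Everything in it is an assumption for illustration: the three tone templates are my own wording (not the paper's eight levels), and `ask_model` is a hypothetical function you would swap for a call to whatever chatbot or API you use.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tone templates; illustrative wording, not the study's prompts.
TONE_TEMPLATES: Dict[str, str] = {
    "blunt": "{task}",
    "moderately_polite": "Please {task}",
    "very_polite": "Could you please be so kind as to {task} Thank you very much.",
}


def build_prompt(tone: str, task: str, output_format: str = "", exclude: str = "") -> str:
    """Wrap a task in a tone template, then append optional format and exclusion cues."""
    parts = [TONE_TEMPLATES[tone].format(task=task)]
    if output_format:
        parts.append(f"Give the answer as {output_format}.")
    if exclude:
        parts.append(f"Don't include {exclude}.")
    return " ".join(parts)


def compare_tones(
    examples: List[Tuple[str, str]],
    ask_model: Callable[[str], str],
) -> Dict[str, float]:
    """Return accuracy per tone over (task, expected_answer) examples."""
    if not examples:
        raise ValueError("need at least one example")
    scores: Dict[str, float] = {}
    for tone in TONE_TEMPLATES:
        correct = 0
        for task, expected in examples:
            answer = ask_model(build_prompt(tone, task))
            if answer.strip().lower() == expected.strip().lower():
                correct += 1
        scores[tone] = correct / len(examples)
    return scores


def stub_model(prompt: str) -> str:
    """Tone-agnostic placeholder so the sketch runs offline; replace with a real call."""
    return "4" if "2 + 2" in prompt else "unknown"


print(build_prompt("moderately_polite", "summarize the text below.",
                   output_format="three bullet points", exclude="direct quotes"))
print(compare_tones([("answer with a single number: what is 2 + 2?", "4")], stub_model))
```

The stub ignores tone, so every level scores the same here. The point is the harness: with a real model and a few dozen examples from your own task, the per-tone scores tell you whether tone matters for your use case.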

A LITTLE HUMAN PSYCHOLOGY BONUS

The authors float a human parallel: in very polite settings, people relax and speak more freely; in hostile ones, prejudice can surface. LLMs may mirror that pattern because their training data mirrors us. It’s a good reminder that our tools inherit our norms, for better and worse.

WHY THIS MATTERS FOR HEALTH, HABITS, AND RECOVERY

Tone is a habit. In fitness or rehab, the micro-prompts we give ourselves—“Get it done” versus “Let’s try three gentle reps”—change compliance and outcomes. The study’s message rhymes with recovery: respectful, specific cues help you (and your AI) show up consistently without drama. As the creator behind this blog has shared in their own recovery story, progress stacks best when the inner voice is firm but kind.

CITABLE NUGGETS (FOR YOUR NOTES)

• “Impolite prompts often result in poor performance, but overly polite language does not guarantee better outcomes.”

• GPT-3.5 English MMLU: 60.02 (very polite) vs 51.93 (rudest). GPT-4 was steadier but still dipped with rudeness.

• RLHF lowered bias and changed politeness sensitivity; the base model didn’t nosedive at the rudest level.
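For a sense of scale, the GPT-3.5 numbers above work out as follows. This is just arithmetic on the two scores quoted in this post, assuming both are accuracy percentages on the same benchmark.

```python
# Scores quoted above: GPT-3.5, English MMLU
very_polite = 60.02  # most polite level
rudest = 51.93       # rudest level

# Absolute gap in percentage points, and the gap relative to the polite score
absolute_drop = round(very_polite - rudest, 2)
relative_drop = round(100 * (very_polite - rudest) / very_polite, 1)

print(absolute_drop, relative_drop)  # 8.09 13.5
```

So the rudest prompt cost about 8 points, or roughly 13.5% of the very polite score.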

SUGGESTED SOURCES TO VERIFY OR EXPLORE

• Yin, Wang, Horio, Kawahara, Sekine (2024). “Should We Respect LLMs? A Cross-Lingual Study on the Influence of Prompt Politeness on LLM Performance.” Proceedings of SICon 2024.

• Background cited in the paper: Morand (1996) on politeness and communication; Bailey et al. (2020) on authentic self-expression; Hendrycks et al. (2021) on MMLU; the new JMMLU (Japanese) benchmark details.

CLOSE

Start treating your prompts like coaching cues: respectful, clear, and tuned to the context. Your AI—and your daily habits—will likely perform better when the tone sets you up to succeed. Try a “moderately polite” template in your next session and track the difference. Your brain and your bots will thank you.

Do you have a life-changing story and want to help others with your experience and inspiration? Please DM me or send me an email at the links below.

Contact Me
